Our world is being run ragged by horrors: political murders, the unending wars in Ukraine and Gaza, a rickety economy. It has reached the point where a school shooting in this country hardly gets any attention anymore. So it’s understandable that we might not notice something unprecedented happening all around us. But CEOs in particular need to pay attention to it, and they cannot afford to be distracted from it.

That thought first came to me over the summer at our first annual AI Leadership Summit, hosted in the lower Manhattan offices of law firm Freshfields. There was plenty of smart insight and lots of meaty, engaging conversation. But my biggest takeaway from the event came from Florin Rotar, CTO at Atos and former Chief AI Officer at Avanade, who asked: “What will it mean to your business when the cost of cognition goes to zero?”

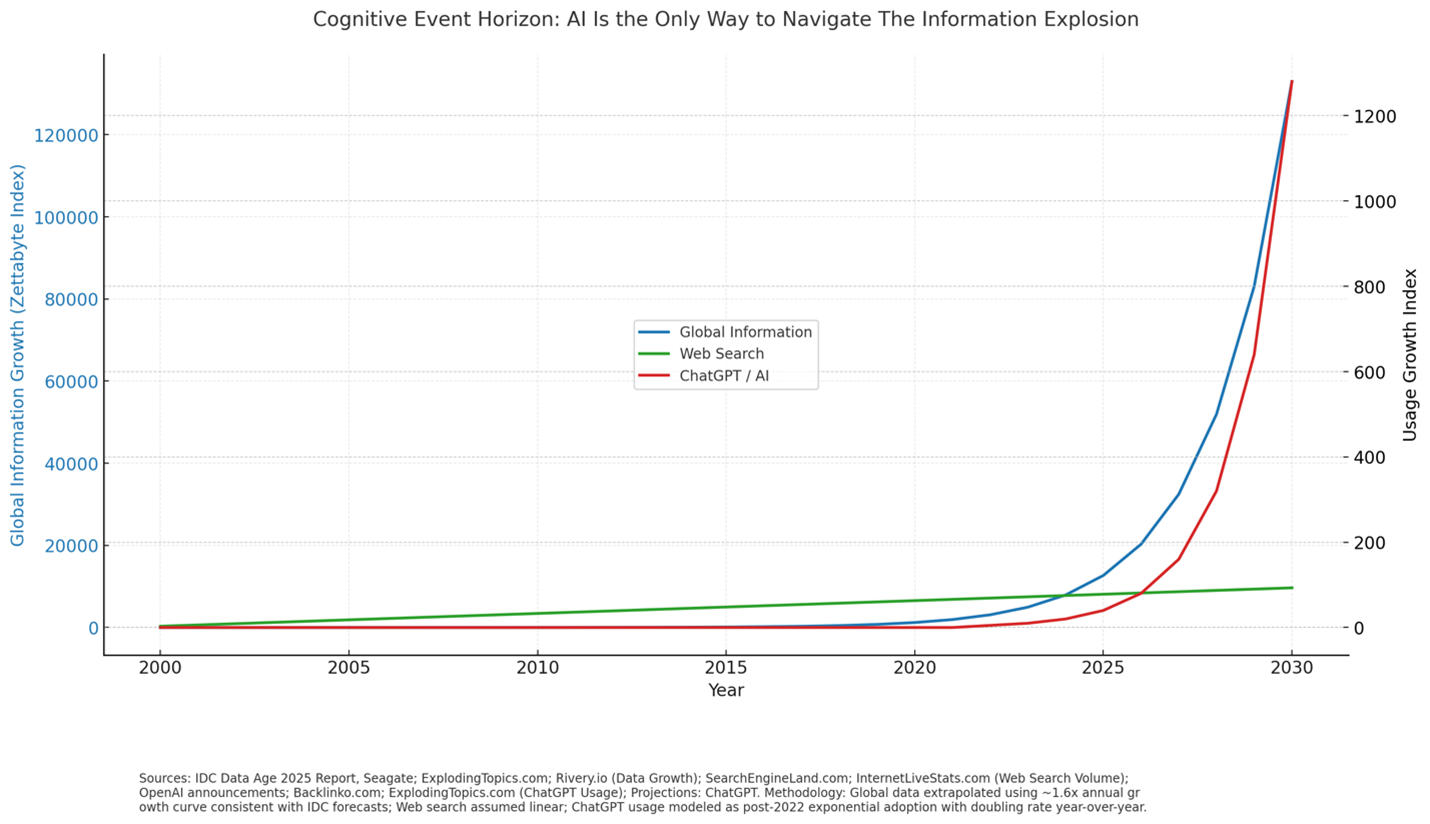

I haven’t been able to shake his question since. Consider this: In 2000, the total amount of digital data in the world was roughly 2 exabytes. By the end of this year, it’ll be north of 180 zettabytes, roughly a 90,000-fold increase. And it’s not slowing down; the rate of creation is accelerating. The amount of information in the world is growing so fast, and so chaotically, that traditional ways of navigating it (search engines, folders, filters, even teams of analysts) are no longer sufficient. It is very much akin to what Ray Kurzweil had in mind with “the Singularity” (see graphic above).

Every black hole has a boundary beyond which not even light can escape. Physicists call it the event horizon. In business today, I think we’re approaching a cognitive event horizon: a point where there’s so much information moving so fast that no unaided human can reasonably keep up. At that point AI becomes not a productivity tool but an interface layer: a translator, a navigator, a thought partner. Without it, we drown. With it, we at least have a shot at staying afloat. AI is not an app. It’s not a piece of software. It’s becoming a new layer of human cognition.

I think we’re nearing that moment quickly, with huge implications for business and society. That could explain, and probably does explain, why ChatGPT hit 100 million users in two months. For so many of us, AI isn’t just about productivity. It’s about survival. To navigate an exponentially more complex world, we need help, and we’re turning to AI.

But here’s the twist: AI isn’t just helping us navigate this information overload—it’s also accelerating it. Every time an LLM writes a paragraph, it creates new content. Every time we use AI to generate a slide, summarize a meeting, write an email or build a model, we’re adding to the pile—more tokens, more documents, more data trails.

The result is a feedback loop. The more we use AI to deal with complexity, the more complexity we create. This isn’t a vicious cycle. It’s something stranger—and more powerful. It’s recursive. Self-reinforcing. I’m not talking about artificial general intelligence. All of this is happening not because machines are becoming conscious, but because they’re becoming essential.

So what does this mean for business? Honestly, I have no idea, and I’m not sure anyone does. But it does mean a shift in leadership mindset is likely in order. Instead of treating this as a “Should we explore AI?” moment, perhaps we need to ask, “How do we help our people think with AI?” Some questions for the team:

- Are our competitive moats based on “proprietary ideas”? If so, how might the cost of cognition going to zero change that strategic advantage, or even our reason for being in business?

- What could our company do if every single person had an executive assistant, thinking partner or coach helping them do their job better? How will we stack up against rivals if not everyone in our shop works this way?

- If the cost of intelligence drops to near zero, what happens to how we structure roles, reviews, reporting lines? Are we designing our work for a world of scarcity or a world of cognitive abundance?

- Where in our company are people drowning in complexity—not just workload—right now? Can AI help?

- What happens to trust and decision-making when machines increasingly become our sense-makers? If every insight is AI-generated or AI-intermediated, how do we maintain confidence and alignment across the business?

- How do we train people not just to use AI—but to think with it? Are we preparing our teams not just to get faster answers, but to ask better questions?

- Where are we underestimating the second-order effects of AI acceleration? We’re solving for today’s complexity—but what complexity are we creating in the process?

As I said, I don’t have the answers to any of these, and there’s a pretty good chance no one on your team does, either. But questions like these, perhaps asked of both your people and your AI together, are probably a good place to start a very interesting conversation. ASAP.